In this article, I will explore how DeepSeek-R1 achieves reasoning capabilities comparable to OpenAI’s closed models using reinforcement learning and innovative distillation techniques. This short analysis examines the model’s architecture, training methodology, and the surprising effectiveness of smaller distilled versions that can run on consumer hardware.

TL;DR: DeepSeek-R1 demonstrates that reinforcement learning without supervised fine-tuning as a preliminary step can achieve reasoning capabilities comparable to OpenAI’s o1. The model uses a MoE architecture with 37B activated parameters (671B total) and achieves 79.8% accuracy on AIME 2024, matching o1’s performance. The training pipeline combines pure RL (DeepSeek-R1-Zero) with cold-start data and iterative fine-tuning, enabling deployment on consumer hardware through distilled versions as small as 1.5B parameters.

Important links:

https://huggingface.co/deepseek-ai/DeepSeek-R1 (original model card)

https://www.deepseek.com/ (Official website)

https://github.com/deepseek-ai/DeepSeek-R1/blob/main/DeepSeek_R1.pdf (technical paper)

https://huggingface.co/deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B (Distilled model, based on Qwen — 1.5B)

https://huggingface.co/deepseek-ai/DeepSeek-R1-Distill-Qwen-7B (Distilled model, based on Qwen — 7B)

https://huggingface.co/deepseek-ai/DeepSeek-R1-Distill-Qwen-32B (Distilled model, based on Qwen — 32B)

https://ollama.com/library/deepseek-r1 (Ollama DeepSeek R1)

https://unsloth.ai/blog/deepseek-r1 (DeepSeek R1 in Unsloth)

Model architecture

DeepSeek-R1 builds upon the Mixture of Experts (MoE) architecture from its base model DeepSeek-V3, employing a sparse activation pattern where only a subset of parameters is active for any given input. The model contains 671B total parameters, but activates only 37B for each forward pass, making it more efficient than dense models of comparable size.

This architectural choice proves important for handling the computational demands of extended reasoning chains. The MoE routing system learns to specialize different experts for various aspects of the reasoning process — from mathematical computation to logical deduction to natural language generation. This specialization enables the model to maintain high performance while keeping computational costs manageable, as evidenced by its ability to handle sequences up to 128K tokens in length.

The architecture’s efficiency becomes apparent in the model’s ability to generate thousands of reasoning tokens per response while maintaining coherence and accuracy throughout extended chains of thought.

Implementation overview

The core innovation in DeepSeek-R1 lies in its training approach. Instead of relying on supervised fine-tuning, the initial model (DeepSeek-R1-Zero) uses pure reinforcement learning to develop reasoning capabilities. This approach begins with the base model and employs Group Relative Policy Optimization (GRPO), eliminating the need for a separate critic model.

The GRPO implementation uses a reward function that combines accuracy and format adherence:

def compute_reward(response, ground_truth):

accuracy_reward = evaluate_correctness(response, ground_truth)

format_reward = check_formatting(response)

return accuracy_reward + format_reward * format_weightTraining pipeline

The training process consists of four distinct phases. The initial phase applies RL directly to the base model, generating DeepSeek-R1-Zero. This model achieves a 71.0% accuracy on AIME 2024, demonstrating that pure RL can develop reasoning patterns.

The second phase introduces cold-start data, incorporating thousands of manually curated examples to improve readability and consistency. The training template enforces a specific structure:

template = """

A conversation between User and Assistant. The user asks a question,

and the Assistant solves it.

The assistant first thinks about the reasoning process in the mind

and then provides the user with the answer.

The reasoning process and answer are enclosed within

and tags.

"""Performance analysis

DeepSeek-R1’s performance matches or exceeds OpenAI’s o1 across multiple benchmarks:

AIME 2024: 79.8% (o1: 79.2%)

MATH-500: 97.3% (o1: 96.4%)

Codeforces rating: 96.3% (o1: 96.6%)

MMLU: 90.8% (o1: 91.8%)

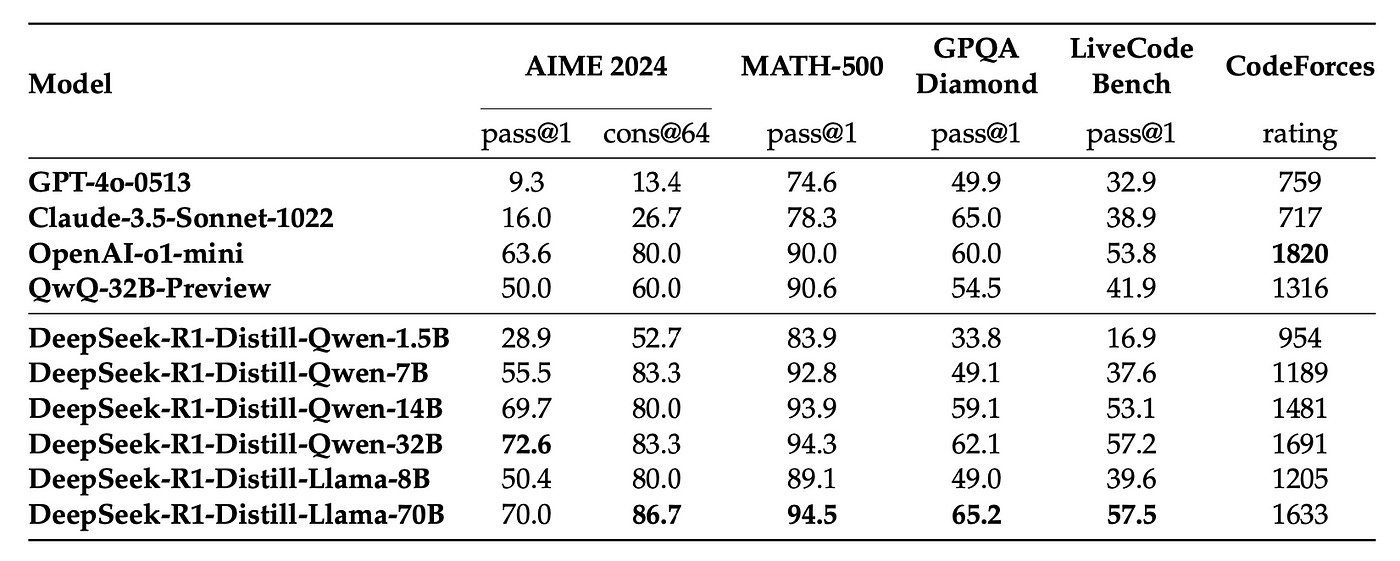

The distilled versions maintain impressive performance even at smaller scales. The 32B parameter version achieves 72.6% on AIME 2024, significantly outperforming other open-source models of similar size, see the table below:

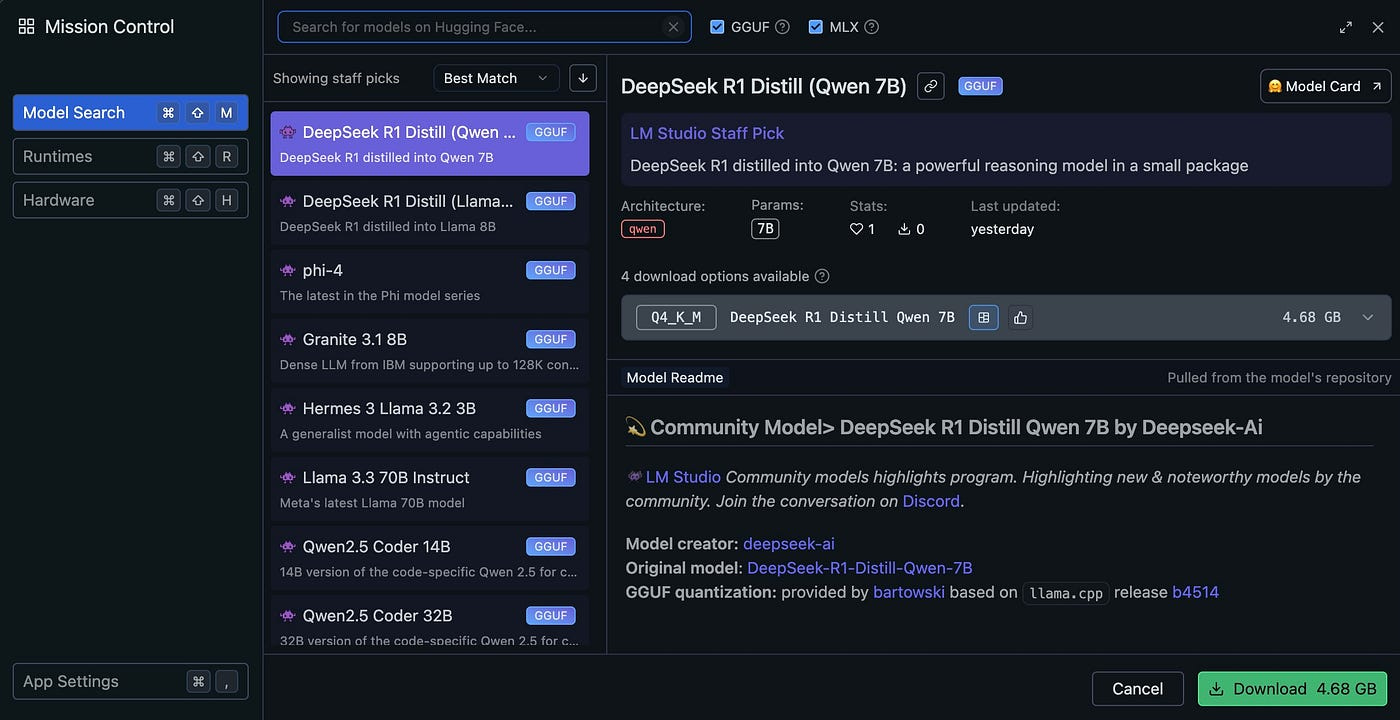

Hardware considerations

While the full model requires substantial computing resources, the distilled versions enable deployment on consumer hardware:

DeepSeek-R1-Distill-Qwen-1.5B: Runs on single consumer GPU (check Ollama and LM studio versions)

DeepSeek-R1-Distill-Qwen-7B: Requires ~20GB VRAM

DeepSeek-R1-Distill-Qwen-32B: Optimal with 2–4 GPUs

Discussion

DeepSeek-R1’s approach represents a significant shift in training methodology. The success of RL in developing reasoning capabilities challenges the conventional wisdom that supervised fine-tuning is necessary. The distillation results suggest that the field may be overestimating the parameters needed for strong reasoning performance.

The most promising aspect is the demonstration that smaller, distilled models can maintain much of the reasoning capabilities of their larger counterparts. This opens the possibility for widespread deployment of reasoning-capable models on consumer hardware, potentially democratizing access to advanced AI capabilities.

However, the challenge of language mixing and the need for cold-start data indicate that pure RL may not be sufficient for all aspects of model development. Future work should focus on developing hybrid approaches that combine the benefits of RL with more structured learning methods.

Conclusion

DeepSeek-R1 has the potential to fundamentally shift how we think about training large language models. Its success challenges three core assumptions in the field: that supervised fine-tuning is necessary for advanced reasoning, that massive parameter counts are required for top-tier performance, and that high-quality reasoning capabilities must remain in the domain of closed, proprietary models.

Originally published at https://unfoldai.com on January 21, 2025.

Connect with me on LinkedIn and GitHub, where I keep sharing such free content to become more productive at building ML systems.

Follow me on X (Twitter) and Medium to get instant notifications for everything new.

Join my YouTube channel for upcoming insightful content.

Subscribe to the UnfoldAI magazine in Substack.

Check my special content on my website.